At CES 2026, NVIDIA CEO Jensen Huang delivered a globally watched AI keynote. He outlined the future direction of AI infrastructure before an audience of government officials, technology leaders, enterprises, media, and investors worldwide, supported by real-time interpretation delivered by Flitto. Widely regarded as one of the most influential moments of the event, Jensen Huang’s CES 2026 AI keynote served as a strategic signal for where AI infrastructure, investment priorities, and industry alignment are likely to move over the next one to three years

👉Watch Jensen Huang’s CES 2026 AI Keynote

In this year’s address, Huang presented NVIDIA’s latest vision for AI infrastructure, spanning agentic AI, system-level architecture, next-generation GPUs, high-performance networking, and data center design. The keynote ran for over an hour, drawing thousands of attendees on site and reaching millions more globally through live media broadcasts, underscoring both its scale and its significance within the global AI ecosystem.

Why Accuracy Matters in AI Keynote Interpretation

When a keynote of this magnitude is not conveyed accurately, the consequences can be significant:

- Technical meaning may be over- or under-interpreted, distorting the true implications of the technology

- Policy and investment decisions may be shaped by misunderstanding rather than intent

- Media coverage may focus on superficial details, missing the core message

In high-impact AI discourse, precision is is essential.

Delivering accurate real-time interpretation of AI keynotes is uniquely challenging for three main reasons.

1. It Is Not a Vocabulary Problem

Understanding AI explanations requires far more than translating individual words. Concepts such as agentic AI, context memory, or system-level acceleration only make sense when their technical roles and relationships are fully understood.

2. Live Speech Is Fragmented and Nonlinear

In live keynotes, Jensen Huang frequently:

- Switches direction mid-sentence

- Refers to slides or hardware on stage using “this,” “it,” or “here”

- Moves rapidly between vision, implementation, and demonstration

Without contextual understanding, meaning can easily break down.

3. Numbers and Structure Change Meaning

In AI infrastructure discussions, numbers, units, and relationships define scale and feasibility. Misinterpreting a figure, a ratio, or a system relationship can fundamentally change what the audience believes is being described.

How Flitto LT Handled the Most Difficult Moments of the Keynote

During Jensen Huang’s CES keynote, Flitto’s live translation (LT) solution was used to deliver real-time AI interpretation through media broadcast channels.

Three specific segments of the keynote illustrate Flitto LT’s technical strength particularly well. Each represents a different category of difficulty.

Case 1: Preserving Core Meaning in High-Complexity Conceptual Explanations

“We’re going to integrate CUDA-X, physical AI, agentic AI, NeMo, and Nemotron deeply into the world of Siemens. And the reason for that is this. First, we designed the chips, and all of it in the future will be accelerated by NVIDIA. We’re going to have agentic chip designers and system designers working with us, helping us design, just as we have agentic software engineers helping our software engineers code today.”

This passage combines abstract concepts (agentic AI), proprietary platforms (CUDA-X, NeMo, Nemotron), and forward-looking system design logic, delivered in a fragmented, improvisational speaking style typical of live keynotes. Accurately interpreting such a segment requires more than linguistic fluency, it demands an understanding of how these concepts relate within NVIDIA’s broader AI strategy.

Flitto’s live translation preserved the core conceptual intent and logical flow of the original speech in real time. Rather than flattening the explanation into generic terms or losing the causal relationship between chip design, acceleration, and agentic collaboration, the interpretation clearly conveyed why NVIDIA is embedding these technologies into Siemens’ ecosystem and how agentic AI extends beyond software into chip and system design itself. This ability to maintain meaning in a high-complexity conceptual segment illustrates Flitto LT’s strength in delivering not just translated words, but coherent technical understanding to a global audience.

Case 2: Delivering Hardware-Scale Precision Without Losing Meaning

👉Hardware Specifications and Scaling (17:03)

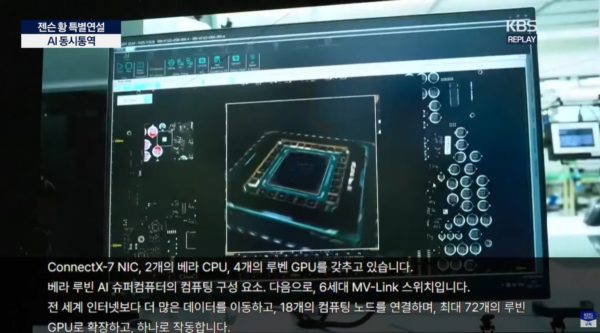

Another defining strength of Flitto LT was demonstrated during the most specification-dense portion of Jensen Huang’s keynote, where he described the physical building blocks of NVIDIA’s next-generation AI supercomputing systems. In this segment, Huang stated:

“Featuring a BlueField-4 DPU, eight ConnectX-9 NICs, two Vera CPUs, and four Rubin GPUs, the compute building block of the Vera Rubin AI supercomputer. Next, the sixth-generation MVLink switch, moving more data than the global internet, connecting 18 compute nodes and scaling up to 72 Rubin GPUs operating as one. Then Spectrum-X Ethernet Photonics, the world’s first Ethernet switch with 512 lanes and 200-gigabit co-packaged optics, scaling thousands of racks into an AI factory.”

This passage presents an extreme challenge for real-time interpretation. Proprietary hardware names, rapidly delivered numerical specifications, and multi-layered system relationships are tightly packed into a continuous flow of speech. Any misinterpretation, whether in component counts, scaling ratios, or functional relationships, would fundamentally alter the technical meaning of the system being described.

Flitto LT successfully preserved hardware accuracy and structural clarity throughout this segment. The live interpretation correctly conveyed component quantities, performance claims, and scaling logic, such as the relationship between 18 compute nodes and 72 GPUs operating as a single system, while maintaining the coherence of the broader architectural narrative. Rather than fragmenting the explanation into disconnected specifications, the interpretation delivered these details as an integrated system description, enabling viewers to grasp not just what components were introduced, but how they work together at scale. This ability to handle dense hardware specifications in real time highlights Flitto LT’s strength in delivering technically reliable information where precision is non-negotiable.

Case 3: Preserving System-Level Reasoning in Real-Time Architecture Explanations

👉System Architecture and Bottlenecks (38:29)

Flitto LT’s third core strength became evident during a system-architecture-heavy segment of the keynote, where Jensen Huang explained one of the most pressing infrastructure challenges facing large-scale AI deployment today: KV cache movement and its impact on network traffic. In this portion of the talk, Huang stated:

“There’s a whole new category of storage systems, and the industry is so excited because this is a pain point for just about everybody who does a lot of token generation today. The AI labs, the cloud service providers, they’re really suffering from the amount of network traffic caused by KV cache moving around. And so the idea that we would create a new platform, a new processor, to run the entire Dynamo KV cache context memory management system and to put it very close to the rest of the rack is a computing revolution.”

This segment poses a unique challenge for real-time interpretation because it is not a description of a product, but an explanation of why an architectural shift is necessary. The speaker moves from industry-wide pain points to root causes, and then to a system-level solution, all while using abstract references (“this,” “it,” “here”) and unfinished sentence structures typical of live technical demonstrations.

Flitto LT accurately preserved the problem–cause–solution logic throughout this explanation. The live interpretation clearly conveyed that the issue was not merely storage performance, but excessive network traffic generated by KV cache movement in token-heavy workloads; that the proposed response involved a new processor platform dedicated to context memory management; and that its physical placement near the rack was central to the solution. Rather than fragmenting the explanation into isolated technical terms, the interpretation maintained the architectural narrative as a coherent whole, enabling viewers to understand why this design represents a fundamental shift in AI system architecture. This ability to sustain system-level reasoning in real time demonstrates Flitto LT’s strength in delivering complex AI infrastructure insights where conceptual accuracy is as critical as technical detail.

What These Three Cases Show Together

Taken together, these three segments demonstrate a single, clear strength:

Flitto LT can accurately interpret AI keynotes in real time across conceptual vision, hardware-scale precision, and system-level architecture.

This capability goes beyond fluent language conversion. It enables complex AI information to be delivered as understandable, decision-grade knowledge to global audiences.