Since its founding in 2012, Flitto has spent more than a decade building the language datasets essential for AI training.

While the emergence of ChatGPT has made AI feel familiar and accessible to the general public, the role and performance of AI were far more limited ten years ago. Skepticism toward AI’s long-term potential was not new; historically, artificial intelligence has gone through periods of heightened doubt, often referred to as “AI winters.” Despite such uncertainty, Flitto consistently believed in the future of AI and focused on one foundational truth: AI performance ultimately depends on the quality of its training data.

As a result, Flitto’s datasets have drawn attention not only from AI companies in South Korea, where its headquarters is located, but also from global AI enterprises. In 2025, Flitto was awarded the USD 7 million Export Tower, recognizing its achievements in overseas data exports. While many companies in South Korea engage in AI dataset construction, only a small number have successfully expanded their data business to international markets. This milestone reflects the global recognition of Flitto’s dataset quality and reliability.

Flitto also plays a central role in a national government project aimed at building a proprietary Korean foundation model (K-AI), where it leads dataset construction as part of a consortium with Upstage. Upstage, widely recognized for its AI capabilities through the SOLAR series, collaborated with Flitto to develop and publicly release a large-scale LLM, Solar Open 100B, built entirely from scratch. This project represents a significant step forward in Korea’s foundation model ecosystem, with Flitto responsible for the underlying data infrastructure.

With this background, let us take a closer look at how Flitto has built its datasets over time.

1. Text Datasets Centered on Parallel Corpora

One of the most well-known machine translation services today is Google Translate. While machine translation technologies existed decades ago, they supported only a limited number of languages and typically required separate software installations. Translation quality was sufficient for rough comprehension but lacked the accuracy and fluency needed for practical use.

In 2006, Google released Google Translate as a web-based service, eliminating the need for dedicated software and making machine translation accessible to anyone with a web browser. Despite early limitations in translation quality, its convenience and broad accessibility led to rapid global adoption.

While many companies and research institutions have worked to develop and improve machine translation systems, the most persistent challenge has been the lack of large-scale, high-quality parallel corpora. Effective training requires vast amounts of accurately translated multilingual data, yet producing such data manually across multiple languages is both time-consuming and costly.

Since its founding in 2012, Flitto has addressed this challenge through a crowdsourced platform, where users contribute multilingual translations and other users subsequently review and validate them. This multi-layered verification process enabled Flitto to rapidly collect large volumes of high-quality parallel corpora and commercialize them at scale.
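To make the contribute-then-review idea concrete, here is a minimal sketch in Python. The `Submission` class, the `accepted_pairs` helper, and both thresholds (`min_reviews`, `min_approval`) are hypothetical names and values chosen for illustration; they are not a description of Flitto's actual platform or acceptance policy.

```python
from dataclasses import dataclass, field


@dataclass
class Submission:
    """One crowd-contributed translation awaiting peer review (hypothetical schema)."""
    source_text: str
    translated_text: str
    votes: list = field(default_factory=list)  # True means a reviewer approved

    def add_review(self, approved: bool) -> None:
        self.votes.append(approved)

    def is_accepted(self, min_reviews: int = 3, min_approval: float = 0.8) -> bool:
        # Both thresholds are illustrative assumptions, not a real policy.
        if len(self.votes) < min_reviews:
            return False  # not enough independent reviews yet
        return sum(self.votes) / len(self.votes) >= min_approval


def accepted_pairs(submissions):
    """Keep only the translations that passed peer review."""
    return [(s.source_text, s.translated_text) for s in submissions if s.is_accepted()]


good = Submission("안녕하세요.", "Hello.")
for vote in (True, True, True):
    good.add_review(vote)

disputed = Submission("감사합니다.", "Sorry.")
for vote in (True, False, False):
    disputed.add_review(vote)

print(accepted_pairs([good, disputed]))
```

Requiring several independent reviews before acceptance is what keeps a single careless contributor or reviewer from deciding whether a pair enters the corpus.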

As machine translation providers expanded language coverage incrementally, starting with widely used languages and gradually adding more, Flitto grew alongside them by supplying the necessary datasets. Today, parallel corpora remain one of Flitto’s core dataset offerings.

What is a Parallel Corpus?

A parallel corpus refers to a dataset in which sentences with the same meaning are aligned in pairs across two or more languages. For example, a Korean sentence matched precisely with its corresponding English or Japanese translation forms a parallel pair. Such datasets play a critical role in training machine translation models, enabling them to learn how expressions in one language correspond to those in another.
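As a rough illustration, a parallel corpus can be pictured as a list of entries, each holding the same sentence in several languages. The layout and the `extract_pairs` helper below are assumptions made for this sketch, not a published Flitto format.

```python
# Each entry aligns one meaning across languages, keyed by language code.
corpus = [
    {"ko": "안녕하세요.", "en": "Hello.", "ja": "こんにちは。"},
    {"ko": "감사합니다.", "en": "Thank you.", "ja": "ありがとうございます。"},
    {"ko": "물 좀 주세요.", "en": "Some water, please."},  # no Japanese yet
]


def extract_pairs(entries, src, tgt):
    """Return (source, target) sentence pairs for one language direction."""
    return [(e[src], e[tgt]) for e in entries if src in e and tgt in e]


for source, target in extract_pairs(corpus, "ko", "en"):
    print(f"{source} -> {target}")
```

A translation model for a given direction trains on exactly these pairs, which is why an entry missing one language simply drops out of that direction.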

2. Speech Datasets for Speech Recognition

Over the past several years, AI speakers and voice-enabled assistants have become widely adopted, both as standalone devices and as built-in smartphone features. These AI systems understand spoken language, allowing users to interact without typing. The convenience feels intuitive to users, yet the technology behind it is complex.

Just as parallel corpora are essential for machine translation, speech recognition requires paired datasets consisting of human speech audio and corresponding text transcripts. When speech is recorded, it can be analyzed through features such as frequency and amplitude, which vary by phoneme. By learning the relationship between these acoustic features and written text, speech recognition models become capable of understanding spoken language.
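The sketch below shows, in simplified form, what "analyzing frequency and amplitude" can mean. It synthesizes a pure tone as a stand-in for recorded speech, then measures its loudness (RMS amplitude) and its strongest frequency with a naive discrete Fourier transform. Everything here is an illustrative assumption: real speech is far more complex than a single tone, and production systems typically rely on richer features such as spectrograms.

```python
import cmath
import math

SAMPLE_RATE = 8000  # samples per second (assumed for this sketch)


def synth_tone(freq_hz, amplitude, n_samples=800):
    """Generate a sine wave as a stand-in for a short audio recording."""
    return [amplitude * math.sin(2 * math.pi * freq_hz * n / SAMPLE_RATE)
            for n in range(n_samples)]


def rms_amplitude(samples):
    """Root-mean-square amplitude, a simple loudness measure."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def dominant_frequency(samples):
    """Frequency (Hz) of the strongest component, found with a naive DFT."""
    n = len(samples)
    best_bin, best_mag = 0, 0.0
    for k in range(1, n // 2):
        coeff = sum(s * cmath.exp(-2j * math.pi * k * i / n)
                    for i, s in enumerate(samples))
        if abs(coeff) > best_mag:
            best_bin, best_mag = k, abs(coeff)
    return best_bin * SAMPLE_RATE / n


audio = synth_tone(freq_hz=440, amplitude=0.5)
# A training example pairs acoustic features with the matching transcript.
example = {
    "transcript": "(placeholder transcript)",
    "rms": rms_amplitude(audio),
    "dominant_hz": dominant_frequency(audio),
}
print(example)
```

A speech recognition model learns from many such audio and transcript pairs, with features computed over short time windows rather than over the whole recording as done here.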

Pronunciation can vary significantly depending on factors such as gender, age, and regional background. Even the same sentence can produce noticeably different acoustic patterns depending on the speaker. To ensure robust performance across real-world conditions, speech datasets must include recordings from many different speakers rather than relying on a single voice.
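One way to check speaker diversity in practice is to count distinct speakers per demographic attribute and flag groups that fall short. The metadata fields, sample values, and the `min_speakers` threshold below are hypothetical and serve only to illustrate the idea.

```python
from collections import Counter

# Hypothetical recording metadata; one speaker may contribute many recordings.
recordings = [
    {"speaker_id": "s01", "age_group": "20s", "region": "Seoul"},
    {"speaker_id": "s01", "age_group": "20s", "region": "Seoul"},
    {"speaker_id": "s02", "age_group": "20s", "region": "Busan"},
    {"speaker_id": "s03", "age_group": "60s", "region": "Seoul"},
]


def coverage(records, attribute):
    """Count distinct speakers (not recordings) per attribute value."""
    seen = {(r["speaker_id"], r[attribute]) for r in records}
    return Counter(value for _, value in seen)


def underrepresented(records, attribute, min_speakers=2):
    """List attribute values covered by fewer than min_speakers speakers."""
    counts = coverage(records, attribute)
    return sorted(value for value, count in counts.items() if count < min_speakers)


print(coverage(recordings, "age_group"))
print(underrepresented(recordings, "region"))
```

Counting speakers rather than recordings matters: a thousand clips from one voice add volume but not the variability the model needs.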

The Importance and Challenges of Speech Data

Speech is often the first interface through which AI interacts with humans in real-world environments. As such, speech recognition performance is a critical determinant of overall AI service quality. Unlike text, which users typically input in a deliberate and structured manner, speech naturally includes hesitation, intonation, speaking speed, and emotional nuance. Without accurately capturing these elements, AI systems struggle to respond effectively in real situations.

A key challenge is that speech data cannot be synthetically generated at scale without losing realism. High-quality speech datasets must be collected from real people speaking naturally in diverse environments. Because the same sentence can produce vastly different signals depending on the speaker and context, speech recognition models require large-scale, high-quality datasets reflecting real-world variability. For this reason, speech data has long been considered one of the most important, and most difficult, types of data to build for AI training.

Building on its experience with text data, Flitto began collecting speech data through its crowdsourced platform in 2015. The company has gathered voice data not only in Korean, English, Chinese, and Japanese, but also in European and Southeast Asian languages. These datasets were used to train the engine behind the speech recognition application deployed for the 2018 PyeongChang Winter Olympics.

Because speech recording requires only native language proficiency rather than multilingual skills, it significantly lowered participation barriers and contributed to the rapid growth of Flitto’s global user base. Many of these users later participated in other dataset projects, including text and image data collection, making the crowdsourcing platform one of Flitto’s most valuable long-term assets.

3. Datasets for LLM Training

In late 2022, ChatGPT was released with little initial publicity, yet it quickly spread through word of mouth on social media. Within just five days, it reached one million users, an unprecedented adoption speed compared to platforms such as Facebook or Instagram.

Whereas traditional AI models were typically designed to perform a single task, such as translation, summarization, or optical character recognition, LLMs (Large Language Models) integrate multiple capabilities into a single model. Their performance significantly surpasses that of earlier AI systems, and the public release of open foundation models initially posed a competitive threat to companies that had long focused on AI research.

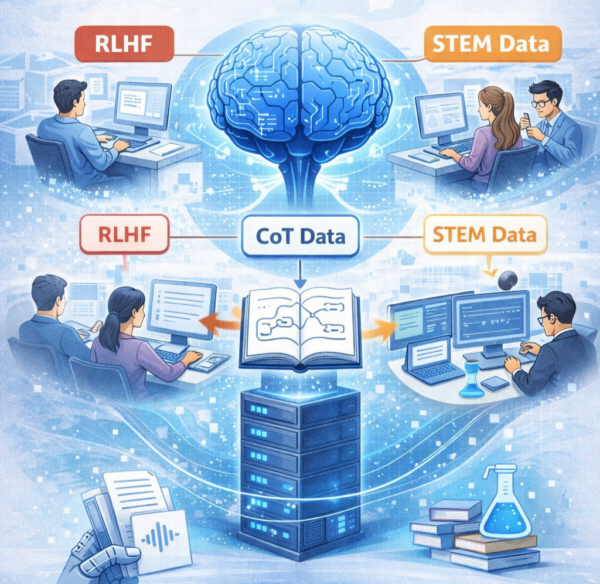

For Flitto, which had been supplying datasets to AI model developers, the rapid advancement of LLMs initially appeared to be a challenge. LLMs contain vastly more parameters than earlier task-specific models and require datasets that are not only larger but also more diverse. New data categories emerged to support LLM performance, including human preference data for RLHF (Reinforcement Learning from Human Feedback), Chain-of-Thought (CoT) data, and high-difficulty STEM datasets. At the same time, AI companies increasingly relied on fine-tuning foundation models, creating demand for smaller but highly specialized, high-quality datasets tailored to LLM characteristics.
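To give a sense of how these newer data categories differ from a simple sentence pair, the sketch below models two of them as records and serializes them one JSON object per line. The class names, field names, and sample contents are assumptions for illustration; actual schemas vary by project and are not described in this article.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class PreferenceRecord:
    """Human preference data of the kind used for RLHF: which answer was better."""
    prompt: str
    chosen: str
    rejected: str


@dataclass
class CoTRecord:
    """Chain-of-Thought data: the reasoning steps are stored with the answer."""
    question: str
    reasoning_steps: list
    answer: str


def to_jsonl(records):
    """Serialize records as JSON Lines, tagging each with its record type."""
    return "\n".join(
        json.dumps({"type": type(r).__name__, **asdict(r)}, ensure_ascii=False)
        for r in records
    )


records = [
    PreferenceRecord(
        prompt="Translate 'Thank you' into Korean.",
        chosen="감사합니다.",
        rejected="안녕하세요.",
    ),
    CoTRecord(
        question="What is 12 * 15?",
        reasoning_steps=["12 * 15 = 12 * 10 + 12 * 5", "120 + 60 = 180"],
        answer="180",
    ),
]
print(to_jsonl(records))
```

Both record types depend on human judgment, either to rank answers or to write out sound reasoning, which is why such datasets tend to be smaller but more labor-intensive than raw text.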

Flitto proactively responded to these changes by expanding its dataset portfolio to meet emerging LLM training needs. By supplying these datasets to major global AI companies, Flitto contributed directly to model performance improvements, efforts that culminated in the USD 7 million Export Tower award and Flitto’s role in supporting the development of a national foundation model.

This concludes our overview of how Flitto has built its datasets over time. In the next installment, we will take a closer look at the data creation methodology and quality control processes that enable Flitto to deliver consistently high-quality AI training data.