Accent recognition is a crucial component of speech recognition software, as different regions have distinct ways of speaking the same language. For example, English is spoken by well over a billion people worldwide, yet even native speakers can struggle to understand one another because of regional accents.

In this article, we’ll explore what accent recognition is, why it matters for AI systems, and showcase specific examples of it in action.

What is accent recognition in AI?

Accent recognition refers to an AI system’s ability to accurately interpret speech despite variations based on a speaker’s regional or cultural background.

AI systems are often trained on “standard” versions of a language. However, this standard form is just one part of the broader linguistic spectrum. Different dialects and accents exist, and accent recognition technology is essential for helping AI understand them.

For instance, we perceive British, American, Australian, and Indian English accents as distinct. For speech recognition software, these differences in articulation pose significant challenges. Training AI to recognize such variations requires vast amounts of data.

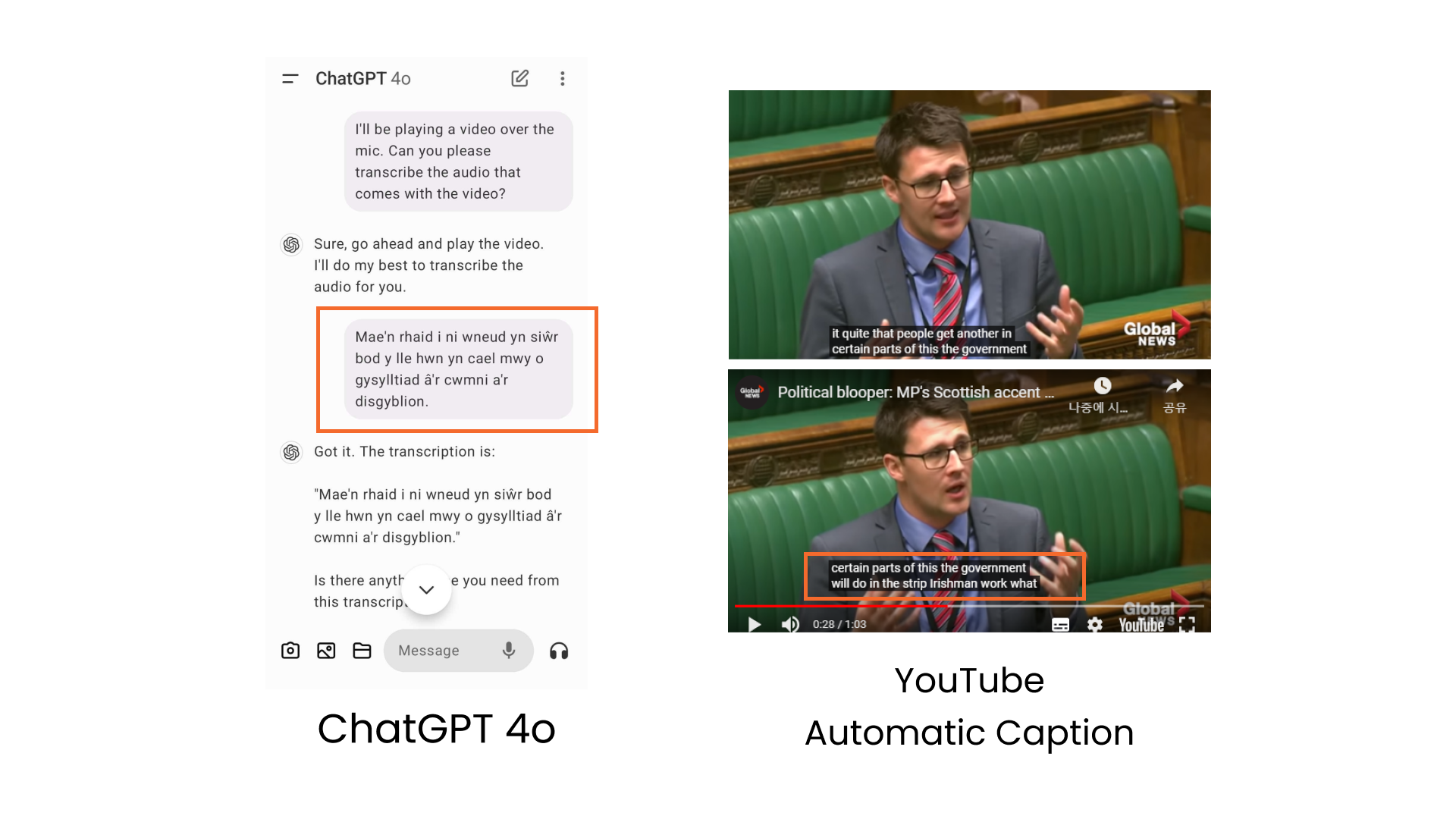

As an example, we played the viral clip of a Scottish parliamentarian with a strong accent to ChatGPT-4o and to YouTube's automatic captioning function. (ChatGPT-4o identified the speech as Scottish Gaelic.)

Why is accent recognition important for AI systems?

Speech-enabled devices are becoming more widespread globally. Because of this, effective speech AI functions, such as voice commands, virtual assistants, and note-taking, are increasingly vital.

Service providers often focus on offering multilingual support, but ensuring the system is inclusive of accents is equally important.

Common accents like American or British English are generally well-supported. Low-resource accents, meaning accents with less data available for training, are harder to handle: they call for advanced AI models and large amounts of speech data to avoid bias and ensure inclusivity.

Ultimately, accented speech recognition technology powered by AI data will enable better communication, improve accessibility, and provide a more personalized experience for users everywhere.

How to train AI models to understand diverse accents

Speech AI systems must be trained to handle diverse accents effectively to provide an inclusive user experience.

The heart of any successful accented speech recognition system is AI data. Large-scale, diverse datasets are crucial in helping machine learning models understand and process the subtleties of various accents.

Improving accent recognition with AI data

To create effective speech recognition software, systems need access to audio data samples representing various accents. AI algorithms rely on extensive datasets containing spoken words, sentences, and phrases from speakers across different regions. The more diverse the dataset, the better the model becomes at recognizing and understanding speech from individuals with different accents.

AI models can sometimes show bias toward certain accents, resulting in better performance for widely recognized accents while struggling with others. To combat this, developers need access to varied datasets that reflect multilingual or multicultural environments.
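One way to make this bias visible is to score the recognizer separately for each accent rather than reporting a single overall number. The snippet below is a minimal sketch of that idea using word error rate (WER). The function names, the `(accent, reference, hypothesis)` tuple layout, and the sample sentences are our own illustrative assumptions, not part of any particular product or library.

```python
from collections import defaultdict


def word_errors(reference, hypothesis):
    """Return (word-level edit distance, number of reference words)."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    # Levenshtein distance over words, keeping only one row at a time.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            curr.append(min(
                prev[j] + 1,                           # deletion
                curr[j - 1] + 1,                       # insertion
                prev[j - 1] + (ref_word != hyp_word),  # substitution or match
            ))
        prev = curr
    return prev[-1], len(ref)


def wer_by_accent(samples):
    """samples: iterable of (accent, reference, hypothesis) tuples (assumed layout)."""
    errors = defaultdict(int)
    words = defaultdict(int)
    for accent, reference, hypothesis in samples:
        e, n = word_errors(reference, hypothesis)
        errors[accent] += e
        words[accent] += n
    # Pool errors over all words of an accent instead of averaging per utterance.
    return {a: errors[a] / words[a] for a in words if words[a] > 0}


# Made-up transcripts, for illustration only.
samples = [
    ("us", "turn on the lights", "turn on the lights"),
    ("scottish", "turn on the lights", "turn in the light"),
]
print(wer_by_accent(samples))  # {'us': 0.0, 'scottish': 0.5}
```

A large gap between accents in a report like this suggests where additional training data is most needed.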

Voice technology is becoming more integrated into everyday life in the form of virtual assistants, smart devices, and customer service systems. AI data enables these systems to adapt dynamically, learning from every interaction and improving over time.

Why high-quality data is key

Without high-quality, well-annotated AI data, accent recognition systems struggle to achieve accurate results. Low-quality data or insufficient representation of accents in training datasets can lead to failures in understanding or misinterpretations of speech.
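Insufficient representation can often be spotted before any training happens. As a rough sketch, the snippet below sums audio duration per accent and flags accents that fall under a chosen share of the dataset. The manifest schema (`accent` and `duration_s` keys) and the 5% threshold are hypothetical choices for illustration, not an established standard.

```python
from collections import Counter


def underrepresented_accents(manifest, min_share=0.05):
    """Return {accent: share of total audio} for accents below min_share.

    manifest: iterable of dicts with 'accent' and 'duration_s' keys
    (an assumed schema for this example).
    """
    seconds = Counter()
    for row in manifest:
        seconds[row["accent"]] += row["duration_s"]
    total = sum(seconds.values())
    if total == 0:
        return {}
    return {
        accent: secs / total
        for accent, secs in seconds.items()
        if secs / total < min_share
    }


# Made-up durations, for illustration only.
manifest = [
    {"accent": "american", "duration_s": 900.0},
    {"accent": "british", "duration_s": 80.0},
    {"accent": "scottish", "duration_s": 20.0},
]
print(underrepresented_accents(manifest))
```

With these sample numbers, only the Scottish recordings fall below the threshold, at 2% of the total audio.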

Risks of a poor AI speech recognition system

- Miscommunication – When the system fails to recognize words or phrases, its output is inaccurate, which can cause misunderstandings between users.

- Bias – The system favors dominant accents, which reduces accuracy for less common or regional accents.

- Poor user experience – Users may get frustrated when their speech isn’t recognized correctly, lowering trust in the technology.

- Limited global reach – Speech recognition systems that cannot handle diverse accents restrict international market expansion.

- Accessibility issues – Users with accents who rely on voice recognition in critical areas like healthcare or education will experience limited functionality.

- Increased human intervention – More manual corrections are needed, lowering system efficiency and increasing costs.

To avoid these risks, the AI data must account for three factors: data quantity, data quality, and real-world conditions.

Both the volume and quality of the data fed into AI models impact the performance of accent recognition systems. Large datasets help AI recognize patterns and differences in speech, while high-quality, annotated data ensures precision in learning.

At the same time, AI-powered speech recognition software must be able to perform in real-world environments with background noise, varying speech speeds, and spontaneous language changes. With AI data that mirrors these conditions, accent recognition systems become more resilient.
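When recordings made in noisy conditions are scarce, a common complement is to mix background noise into clean speech at a controlled signal-to-noise ratio (SNR). The snippet below is a minimal sketch of that augmentation on plain lists of samples. The function names and the synthetic sine-wave "speech" are assumptions for illustration, and a real pipeline would typically operate on audio arrays loaded from files.

```python
import math
import random


def rms(signal):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(x * x for x in signal) / len(signal))


def mix_at_snr(speech, noise, snr_db):
    """Add noise to speech so the result has the requested SNR in decibels."""
    if len(noise) < len(speech):
        raise ValueError("noise must be at least as long as speech")
    noise = noise[:len(speech)]
    speech_rms, noise_rms = rms(speech), rms(noise)
    if noise_rms == 0:
        return list(speech)  # silent noise: nothing to add
    # Solve snr_db = 20 * log10(speech_rms / (gain * noise_rms)) for gain.
    gain = speech_rms / (noise_rms * 10 ** (snr_db / 20))
    return [s + gain * n for s, n in zip(speech, noise)]


# Synthetic stand-ins: a 220 Hz tone as "speech" and Gaussian noise.
random.seed(0)
speech = [math.sin(2 * math.pi * 220 * t / 16000) for t in range(1600)]
noise = [random.gauss(0.0, 1.0) for _ in range(1600)]
noisy = mix_at_snr(speech, noise, snr_db=10.0)
```

Lower `snr_db` values produce harder examples, so sampling a range of values per utterance exposes the model to varied conditions.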

Real-life use case of advanced accent recognition AI

As AI technology continues to evolve, advanced accent recognition AI systems are opening up exciting possibilities.

Real-time AI interpretation services

In international events like conferences and seminars, bilingual speakers tend to choose to speak in English. This is why AI interpretation services need to ensure they can understand regional English accents.

Some AI interpretation services, like Flitto’s Live Translation, are trained on language data contributed by millions of users worldwide, which helps them understand strong accents.

We tried playing the same viral clip above to Flitto Live Translation. Aside from Scottish accents, it is able to understand other accents in English, even the accents of English-as-a-second-language speakers from France, India, and more.

Conclusion

In building an effective AI speech recognition system, the power of accurate and scalable AI data cannot be overstated. Advanced systems that use AI data from millions of speakers globally will lead to more accurate, inclusive, and unbiased speech recognition. As these systems learn to recognize diverse accents, more tools will be inclusive, accessible, and effective for everyone. This way, accented speech will no longer be a barrier for individuals using AI services.