Part 2 of The European Chatbot and Conversational AI Summit 2023–3rd Edition series

Conversational AI and chatbots are now familiar technologies for many, businesses and consumers alike. When leveraged aptly, these automated customer service mechanisms can bring powerful results. These can include heightened convenience and cost reduction.

Some enterprises have demonstrated particularly skillful integration of conversational AI. Several of them presented their use cases at The European Chatbot and Conversational AI Summit 2023.

In this article, Flitto DataLab has compiled three instances where large language model (LLM) chatbots have added value to customer service.

Multi-Channel, Multi-Language Self Service Using “QnABot on AWS”

Presenter: Thomas Rindfuss (Sr. Solutions Architect at Amazon Lex)

The QnABot is a state-of-the-art conversational AI tool built on the Amazon Lex platform. It’s designed to create and deploy chatbots within the AWS environment.

With a wide range of user-friendly tools and natural language processing capabilities, this LLM chatbot is a robust tool for developing language-based models.

Leveraging advancements in LLMs, this bot streamlines the training process for intent matching, enabling rapid learning and improved ability to answer a variety of questions.

Additionally, the QnABot supports over 70 languages in chat and 27 in voice channels, making it a multi-language bot. It leverages Amazon Comprehend to determine the dominant language in an input. Ultimately, the bot offers a simple, one-click deployment process and a user-friendly visual interface to manage content.
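As a rough illustration of the language-detection step, the sketch below picks the highest-scoring language from the response of Amazon Comprehend's `detect_dominant_language` operation. The helper name `dominant_language` and the fallback to `"en"` are assumptions made for this example, not QnABot's actual code.

```python
def dominant_language(comprehend_client, text, default="en"):
    """Return the language code that Comprehend scores highest for `text`.

    `comprehend_client` is expected to expose detect_dominant_language(Text=...),
    as the boto3 Comprehend client does. `default` is a hypothetical fallback
    used when no language is returned.
    """
    response = comprehend_client.detect_dominant_language(Text=text)
    languages = response.get("Languages", [])
    if not languages:
        return default
    # Each entry carries a LanguageCode and a confidence Score.
    best = max(languages, key=lambda lang: lang["Score"])
    return best["LanguageCode"]
```

With boto3 installed and AWS credentials configured, the client would be created with `boto3.client("comprehend")` and passed in as the first argument.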

What Are Large Language Models and How Are They Trained?

Large language models (LLMs) are complex mathematical functions that are designed to map human text input to a meaningful output.

To train these models, a large dataset of text conversations is first collected. The dataset can include common expressions, questions, and in-context phrases, along with corresponding labels or answers.

Once this dataset is gathered, it undergoes preprocessing so that NLP models can understand its format. This typically involves tokenization, where the text is split into tokens that are then mapped to numbers, as LLMs require numeric input to function.

After tokenization, stop words (e.g. “to”, “of”, “and”) may be removed to simplify the data and improve processing efficiency.
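The preprocessing steps described above can be sketched in a few lines. This is a deliberately simplified example: the stop-word list, the regular expression, and the function names are all assumptions for illustration, and production LLM pipelines generally rely on subword tokenizers rather than word-level splitting.

```python
import re

# Tiny illustrative stop-word list (an assumption, not a standard list).
STOP_WORDS = {"to", "of", "and", "the", "a"}

def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())

def remove_stop_words(tokens):
    """Drop tokens that appear in the stop-word list."""
    return [t for t in tokens if t not in STOP_WORDS]

def build_vocab(token_lists):
    """Assign each distinct token an integer ID in order of first appearance."""
    vocab = {}
    for tokens in token_lists:
        for t in tokens:
            vocab.setdefault(t, len(vocab))
    return vocab

def encode(tokens, vocab):
    """Convert tokens to their integer IDs, skipping unknown tokens."""
    return [vocab[t] for t in tokens if t in vocab]

tokens = remove_stop_words(tokenize("How to change the date of my booking?"))
vocab = build_vocab([tokens])
ids = encode(tokens, vocab)
print(tokens)  # ['how', 'change', 'date', 'my', 'booking']
print(ids)     # [0, 1, 2, 3, 4]
```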

Once the data is preprocessed, a suitable model can be selected based on the training data and project objectives. The training process involves passing in an example and a corresponding label (e.g. a phrase and its response), where the model makes weighted calculations based on the input. Initially, these outputs are likely to be incorrect, requiring a correct label to calculate a loss function.

An optimization algorithm is then applied to adjust the model’s weights and reduce the loss value. This process is repeated iteratively. Eventually, the model converges on an optimized set of weights that accurately map inputs to outputs.
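To make the predict, measure loss, adjust weights cycle concrete, here is a minimal sketch using gradient descent on a two-parameter linear model. It is a toy stand-in, not an LLM: real models have billions of weights and use more elaborate optimizers, but the loop has the same shape. The data, learning rate, and function names are assumptions chosen for the example.

```python
def mse(examples, w, b):
    """Mean squared error between predictions w*x + b and the labels."""
    return sum(((w * x + b) - y) ** 2 for x, y in examples) / len(examples)

def train(examples, lr=0.05, epochs=1000):
    """Fit w and b by repeatedly stepping against the gradient of the loss."""
    w, b = 0.0, 0.0  # initial weights, so early predictions are wrong
    n = len(examples)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in examples:
            error = (w * x + b) - y      # prediction minus correct label
            grad_w += 2 * error * x / n  # d(loss)/dw
            grad_b += 2 * error / n      # d(loss)/db
        w -= lr * grad_w                 # adjust weights to reduce the loss
        b -= lr * grad_b
    return w, b

# Labeled examples generated from y = 2x + 1.
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train(data)
print(round(w, 3), round(b, 3))
print(mse(data, 0.0, 0.0), mse(data, w, b))
```

The loss starts high with the initial weights and shrinks toward zero as the weights converge on the values that generated the data.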

Once the model has been trained, it can be used to make predictions by applying its learned weights to new input examples.

Overall, this process can be time-consuming and complex. However, the results can be extremely powerful. It enables LLMs to understand and respond to human language in a way that was previously impossible.

What Is the Use of These LLMs for the QnABot?

The QnABot uses LLMs that are deployed in the same directory as the bot to make predictions based on user input text, without requiring the use of API calls.

The user responsible for fine-tuning the model provides training questions to the LLM chatbot to calibrate its predictions. The resulting questions and responses are stored in tokenized form (as vectors of numbers) in the OpenSearch service that Amazon provides in the bot environment.

When a user enters a question, the chatbot tokenizes it and finds similar questions to group together into an “intent group.” This group, along with the user’s question, is sent to the LLM for a more accurate response.

Using the intent group, the LLM chatbot can take the context of the user’s question into account and provide a better answer. This reduces the chances of errors and misunderstandings in the chatbot’s response.
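The retrieval step described above can be sketched as a similarity search followed by prompt assembly. This is a hedged illustration only: the three-dimensional vectors are made up, the function names are hypothetical, and a real deployment would obtain embeddings from a model and query a vector index such as OpenSearch rather than sorting a Python list.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def top_matches(query_vec, stored, k=2):
    """Return the k stored items whose vectors are most similar to the query."""
    ranked = sorted(stored, key=lambda item: cosine(query_vec, item["vector"]),
                    reverse=True)
    return ranked[:k]

def build_prompt(user_question, matches):
    """Combine the matched question/answer pairs with the user's question."""
    context = "\n".join(f"Q: {m['question']}\nA: {m['answer']}" for m in matches)
    return f"{context}\n\nUser question: {user_question}"

# Made-up vectors standing in for stored question embeddings.
stored = [
    {"question": "How do I reset my password?",
     "answer": "Use the reset link.", "vector": [0.9, 0.1, 0.0]},
    {"question": "How do I change my password?",
     "answer": "Open account settings.", "vector": [0.8, 0.2, 0.1]},
    {"question": "What are your opening hours?",
     "answer": "9am to 5pm.", "vector": [0.0, 0.1, 0.9]},
]

query_vec = [0.85, 0.15, 0.05]  # hypothetical embedding of the user's question
matches = top_matches(query_vec, stored)
prompt = build_prompt("I forgot my password", matches)
print(prompt)
```

Here the two password-related questions form the group passed to the model, while the unrelated opening-hours entry is left out of the prompt.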

Leveraging large language models and natural language processing (NLP) technology, the LLM chatbot streamlines the training processes. As a result, the QnABot demonstrates superb capabilities with reduced costs and improved customer satisfaction, making it one of Amazon’s valuable solutions.

The Dawning of a New Era of Customer Service

Presenter: Eugene Neale (Director of Business IT and CX Engineering at loveholidays)

LLM chatbots are revolutionizing the customer service industry. This is especially so in fields that require time-consuming and arduous tasks.

Eugene Neale emphasizes how “the dawn of a new era” is coming to the travel industry. As an example, he introduced advanced chatbot interfaces like Sandy of loveholidays.

The travel industry was previously rated the second worst for customer service, which challenged businesses in the sector to improve.

However, with the help of AI, travel companies such as loveholidays were able to create a more effective customer service experience.

According to Neale, the travel business has particularly large room for improvement. This is due to its lengthy processes, from buying tickets to boarding a plane. Chatbots also provide a solution to the challenge of responding to common customer questions.

Factors on the Growing Impact of Conversational AI

Data is one of the key ingredients that make LLM chatbots possible. When leveraged well, data can streamline customer service through more personalized responses to customer inquiries.

Advanced technologies like LLMs empower the interface to offer an even more engaging experience to customers. For example, suppose a customer asks an LLM chatbot a question about a product they recently purchased. The bot can quickly access the database. Next, it can generate an engaging response that is relevant to the specific product in question.

Neale believes that our evolving attitude toward interacting with computers has also played a significant role in this progress. Thanks to the popularity of chatbots like ChatGPT, humans are becoming more willing to interact with machines.

The Benefits of Conversational AI

One of the biggest benefits of conversational AI is the ability to personalize the customer experience. Chatbots like Sandy at loveholidays are able to get to know customers and provide personalized responses that meet their specific needs.

Additionally, Sandy reduced wait time, handle time, and the average number of conversation turns severalfold, all while increasing the customer satisfaction score. It can also handle large volumes of requests simultaneously, making it easier to scale customer service efforts as needed.

The benefits of personalized, efficient, and effective customer service are clear. This is why businesses are turning to AI solutions like fine-tuned LLM chatbots to meet those needs.

The future of customer service in the travel industry looks bright with the help of conversational AI.

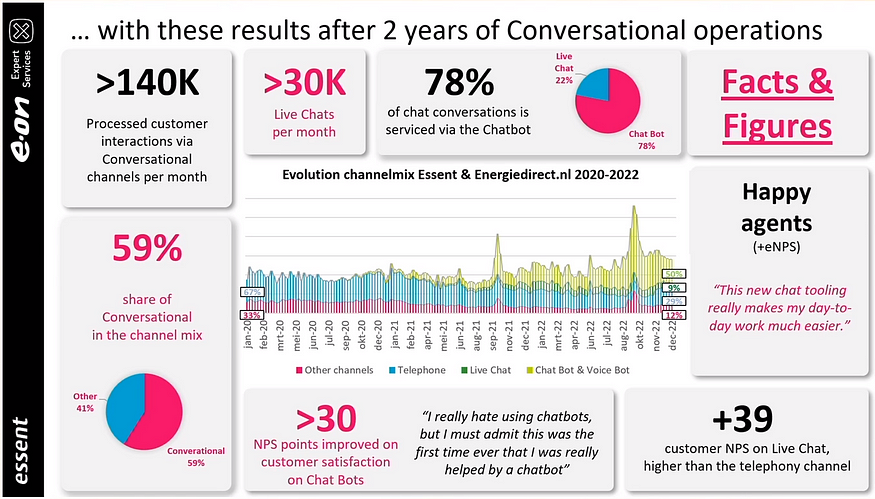

E.ON & Essent — Why and How Conversational Became Essent’s Most Important Service Channel in Just 7 Months

Presenters: Fabian Schneidereit (Delivery Manager at E.ON Digital Technology) and Jeroen Roes (Lead Conversational AI Senior Program Manager at Essent)

Energy companies, including Essent, face a high volume of customer service requests every day.

According to Jeroen Roes in his presentation, Essent had relied on telephony as its main customer service tool for over 100 years. However, with increasing competition within the Dutch market and a surge in customer service requests, Essent recognized the need to transform its operations to stay ahead.

In addition to increasing operational efficiency and reducing costs, Essent saw the potential for chatbots to provide a more streamlined and personalized experience for its customers. By leveraging this new technology, Essent could better meet the growing demand for customer service while reducing its reliance on costly telephony services.

The Impact of Conversational AI on Essent’s Service Channels

In just seven months, conversational AI became Essent’s most important service channel, responsible for handling 59% of all requests. This was achieved by “using conversational AI as an enabler to achieve a broader strategic objective of digitalization,” according to Roes.

They created a dedicated division to take care of conversational AI and used the technology in all parts of the company’s operation.

Their main strategy was to create a foundational project and then optimize it through bold moves: in their case, closing down the telephone lines to customer service.

A Step-by-Step Guide to Building an Integrated Conversational Capability

The success of Essent’s conversational AI strategy can be replicated by following a step-by-step guide to building an integrated conversational capability presented by Roes.

- Appoint a lead – First, it is important to appoint a lead to drive the project forward. This person will motivate growth and align the dedicated team’s mission with the company’s broader strategic objective. Building a team and partnerships that are fit for the current phase is also crucial.

- Set a goal and prioritize – To deliver results, it is essential to determine what is needed to achieve the goal and prioritize and scope accordingly. Setting milestones that have high impact and high visibility is also important to keep the team motivated. Influencing stakeholders is crucial as their numbers and influence grow, and learning from mistakes and adjusting iteratively is important.

- Keep an eye – Finally, the transition to the new system needs to be managed carefully. Structuring and formalizing the new system is essential, and sticking to the plan, yet adjusting it as needed, will help ensure continued success.

To summarize, the success of Essent’s conversational AI strategy was achieved by aligning it with the broader customer contact strategy and building an integrated conversational capability in the organization. By creating a solid foundation through a project and then iteratively building and optimizing, Essent was able to achieve its goals and transform its customer service operations.

Coming up next:

The three enterprise examples covered above illustrate the effectiveness of applying conversational AI models across various business sectors. Conversational AI systems, including LLM chatbots, can make an immensely positive difference for companies when combined with apt datasets and strategic business decision-making.

Our next article will cover the viewpoints of industry leaders, from representatives of leading companies and business leaders to conversation designers and researchers in the advanced AI domain.