If the concept of underfitting and overfitting confuses you, you’re not alone. (Trust us!)

Navigating machine learning terminologies can be very difficult, especially if you’ve only recently started to dig deeper.

The good news is that, as a non-expert, it’s still possible to have a grasp on what each term means using simplified examples. And this is especially so if your goal is to just familiarize yourself with some key concepts in machine learning, so that you can better appreciate the perks of AI systems.

In this article, we’ll talk about the concepts of underfitting and overfitting in machine learning. If you’ve kept a keen eye on the field, you may have heard at least one of the two. These two concepts continue to plague machine learning and data professionals, and that is perhaps why they are commonly thrown about in the field.

Let’s have a look at what underfitting and overfitting are, together with some simplified examples.

What is underfitting and overfitting in machine learning?

Underfitting and overfitting refer to a set of negative phenomena in machine learning that interfere with optimal model performance. They are often caused by a mismatch between a machine learning model and the data it’s trained on.

Even if you’re new to the term, you’ve probably already experienced minor cases of underfitting and overfitting. For instance, a voice assistant not being able to understand some voice commands at all due to certain accents can be a result of overfitting. Meanwhile, the infamous AI hallucinations can be caused by underfitting.

Performance without underfitting and overfitting

An ideal or good fit machine learning model has a good balance between capturing the patterns within the training data and generalizing well to new, unseen data. It can capture the underlying patterns and relationships present in the training data, without memorizing things word-by-word or detail-by-detail.

The model should also perform well on new, unseen data that wasn’t part of the training set. It is able to demonstrate an understanding of the underlying concepts, rather than overly relying on specific details.

A good fit model has a clearly defined scope and a dataset that clearly aligns with it. As a result, its performance might look like this.

What is underfitting in machine learning?

Underfitting occurs when a model is too simple to understand the patterns in the data.

An underfit model doesn’t learn the data well and may perform poorly on both the training set and new, unseen data because it oversimplifies the relationships in the data. Imagine a shoe that can fit anything between a human foot and a little dog because it’s too big and loose (underfitting).

Several factors can contribute to underfitting. It can be caused by a lack of, or limited data.

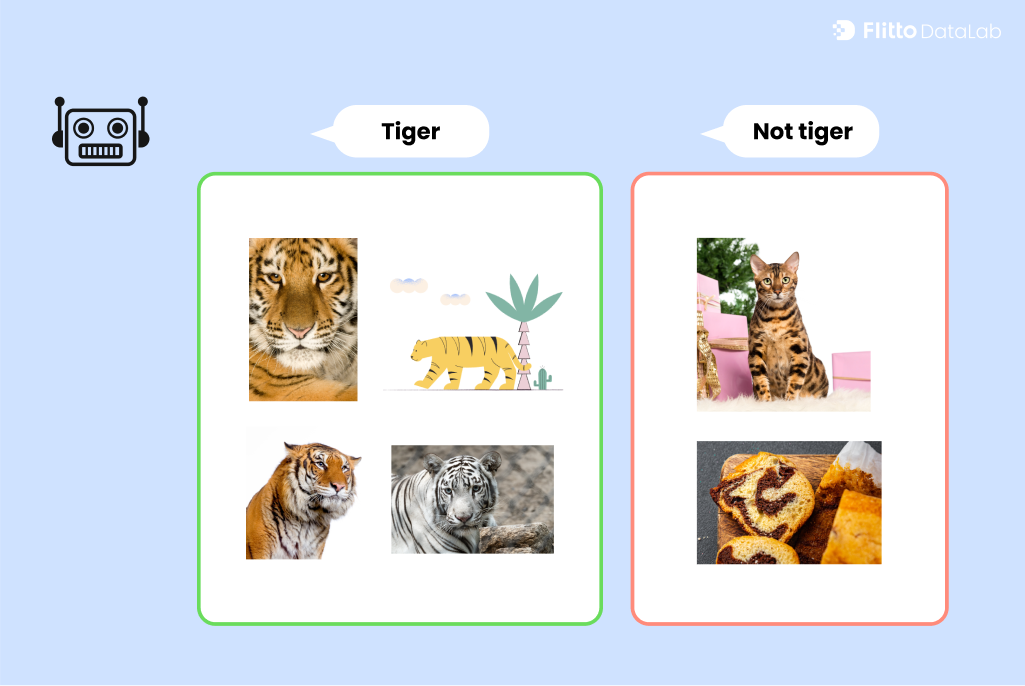

Let’s look at the performance of an underfit tiger recognition model.

In the visual example above, for instance, the model may have been built to define tiger with a single feature:

- Striped

Naturally, it gets very creative to the point where it can visualize a tiger in any image with stripes.

Causes of Underfitting

Some of the notable causes of underfitting include these factors.

- Model simplicity: The chosen model is too simple to capture the underlying patterns in the data.

- Insufficient features: The model lacks important features or attributes needed to understand the complexity of the problem.

- Limited training data: The training dataset is too small, and the model doesn’t have enough examples to learn from.

- Over-regularization: Excessive use of regularization techniques that penalize the model for complexity.

What is overfitting in machine learning?

On the other hand, overfitting happens when a model hyper-focuses on a specific set of examples. As a result, it struggles when faced with new, unseen examples.

An overfit model is trained to recognize even the unwanted noise and quirks, making it less effective at making predictions on new data. Imagine Cinderella’s glass heel that no one else could fit in.

An overfit model may look like this:

The model may have been built to recognize tigers by the following attributes:

- Striped

- Large frame

- Furry

- Orange

- Three-dimensional

Ideally, a tiger recognition model would have to recognize a white tiger or a flat tiger. In severely overfit cases, a model might even have difficulties recognizing the same tiger from its own dataset just by changing the lightings or angles.

Causes of Overfitting

- Complex model architecture: A complex model can end up memorizing the training data instead of learning general patterns.

- Overemphasis on noisy features: The model might give too much importance to noisy or irrelevant features in the training data.

- Lack of regularization: Insufficient use of regularization techniques allows the model to become too flexible.

Conclusion

Underfitting and overfitting are great examples of there being no one-size-fits-all approach in machine learning.

By now, it’s well-understood how the quality of data can make or break a model. However, there still exists a misconception that a powerful model might cancel out the need for large datasets, or that a large amount of data can be the solution to augment a simpler model. This is not the case, as the complexity and size of a model and data need to be balanced; and it’s highly challenging to do so.

That’s why it’s our goal here at Flitto DataLab to devise and construct the right dataset a model might need.

While we continue on our craft, we will continue to bring digestible bits of data and AI information for you.